Six years ago we took a look at 64bit benchmarking and provided some examples of why 64bit can give better performance than 32bit.

What we found at the time was that a 64bit CPU, running a 64bit O/S, executing 64bit code could in some cases be twice as quick as 32bit code.

We are now researching PerformanceTest V8. Since the initial study many new CPUs have been released, and the gap between 32bit and 64bit performance has grown. In PerformanceTest V7 some CPUs now score 4 to 6 times higher in the integer maths test when running 64bit code than when running 32bit code.

Six times the performance is an enormous difference. So we decided to dig a bit deeper to see what was going on.

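In PerformanceTest V7 the integer maths test is made up of 8 individual mathematical operations performed in equal numbers. These are:

- Addition of two 32bit numbers

- Subtraction of two 32bit numbers

- Multiplication of two 32bit numbers

- Division of two 32bit numbers

- Addition of two 64bit numbers

- Subtraction of two 64bit numbers

- Multiplication of two 64bit numbers

- Division of two 64bit numbers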

The first four operations are, unsurprisingly, executed in the same way and at the same speed on a 32bit machine and a 64bit machine.

The second four operations, those on 64bit numbers, are executed much faster on a 64bit machine. See the above referenced post for details.
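One contributor to that gap can be sketched in a few lines. A 32bit CPU works on 32bit words, so a 64bit addition has to be split into a low-word add followed by a high-word add that includes the carry, where a 64bit CPU needs a single add. The Python below only emulates that split with bit masks; the function name `add64_using_32bit_words` is made up for this illustration and is not taken from PerformanceTest.

```python
MASK32 = 0xFFFFFFFF  # keeps a value inside one 32bit word


def add64_using_32bit_words(a, b):
    """Add two unsigned 64bit values using only 32bit-wide pieces."""
    # Split each operand into a low and a high 32bit word.
    a_lo, a_hi = a & MASK32, (a >> 32) & MASK32
    b_lo, b_hi = b & MASK32, (b >> 32) & MASK32

    lo_sum = a_lo + b_lo          # first add: the low words
    carry = lo_sum >> 32          # 1 if the low add overflowed 32 bits
    hi_sum = a_hi + b_hi + carry  # second add: the high words plus the carry

    # Reassemble, wrapping at 64 bits as the hardware would.
    return ((hi_sum & MASK32) << 32) | (lo_sum & MASK32)


print(hex(add64_using_32bit_words(0xFFFFFFFF, 1)))  # carry moves into the high word
```

Even this simplest case needs two dependent additions instead of one, and multiplication and division need considerably more work than that.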

What we have found in more recent testing however is also interesting.

The first interesting point is that division of 32bit numbers is pretty much always around four times slower than Add, Subtract or Multiply. This isn't news, as it is well known that division is a harder operation to do.

What was more interesting was that 64bit division was far slower than 32bit division. Doing 64bit division on a 32bit system was extremely expensive, with performance dropping fourteen-fold going from 32bit to 64bit numbers. This, more than anything else, accounts for why the PerformanceTest V7 integer maths test does so well on 64bit compared to 32bit.

The second interesting point is that some of the newest CPUs, for example the AMD A8-3850 and A6-3650, have got significantly faster at 64bit division. This has really lifted their results in this test.

The lesson in this (for us) is that the V7 integer maths test places too much weight on how fast 64bit division can be performed. The same is also true, to a lesser extent, for 32bit division and multiplication. More weight should be given to the other operations, Add, Subtract, etc. This would moderate the differences between CPU types and also reduce the large differences between 32bit and 64bit.

Because the division operation is so slow compared to the other operations, and each operation was weighted equally, the V7 integer maths test has become largely a test of how fast the CPU can do division. That makes it a rather narrow, unrealistic test of a CPU.

So in V8 we plan to reduce the number of division operations performed and also introduce some additional variety into the test in the form of logic operations like bit shifting & increment instructions.
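To get a feel for how lopsided the equal weighting is, here is a rough back-of-the-envelope calculation. It uses the two ratios quoted above for a 32bit system (32bit division about four times slower than add, subtract or multiply, and 64bit division about fourteen times slower again). The cost of 2 units for 64bit add, subtract and multiply is a placeholder assumption, and the reduced-division mix is purely hypothetical; it is not the actual V8 mix.

```python
# Relative cost per operation on a 32bit system, in arbitrary time units.
# From the text: div32 is ~4x an add, div64 is ~14x div32.
# ASSUMPTION: 64bit add/subtract/multiply cost 2 units each (placeholder values).
COSTS_32BIT_SYSTEM = {
    "add32": 1, "sub32": 1, "mul32": 1, "div32": 4,
    "add64": 2, "sub64": 2, "mul64": 2, "div64": 4 * 14,
}


def division_share(costs, counts):
    """Fraction of total run time spent in the two division operations."""
    total = sum(costs[op] * counts[op] for op in costs)
    division = sum(costs[op] * counts[op] for op in costs if op.startswith("div"))
    return division / total


# V7 style: every operation performed the same number of times.
equal_counts = {op: 1000 for op in COSTS_32BIT_SYSTEM}

# HYPOTHETICAL mix with ten times fewer divisions, for comparison only.
fewer_divisions = dict(equal_counts, div32=100, div64=100)

print(f"equal counts:    {division_share(COSTS_32BIT_SYSTEM, equal_counts):.0%}")
print(f"fewer divisions: {division_share(COSTS_32BIT_SYSTEM, fewer_divisions):.0%}")
```

Under these assumptions division accounts for roughly 87% of the run time with equal counts, and about 40% with the reduced mix. The exact figures depend entirely on the assumed costs, but the shape of the problem does not.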

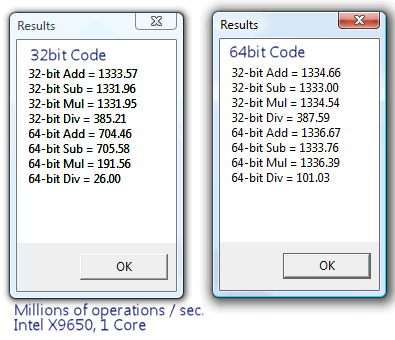

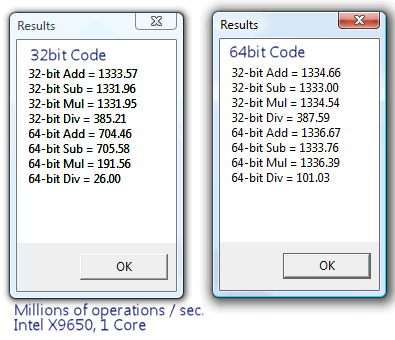

Here are the actual V7 numbers from an Intel X9650 CPU, running both 32bit code and 64bit code. Higher numbers are better.

You might be wondering just why doing 64bit division on a 32bit system is so slow. There are two reasons: A) a 32bit system has no single native machine code instruction that divides one 64bit number by another, and B) the calculations needed to perform a 64bit division using 32bit instructions are rather complex. Each division takes the CPU dozens of steps to complete.
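As an illustration of why the step count climbs, here is a minimal sketch of textbook shift-and-subtract division, written so that every value is held as a pair of 32bit words. It is not the routine PerformanceTest or any particular compiler uses (real runtime libraries typically use more optimised methods); the function names are invented for this example, and Python is only emulating the 32bit words with masks.

```python
MASK32 = 0xFFFFFFFF  # keeps a value inside one 32bit word


def shift_left_1(hi, lo, bit_in):
    """Shift the 64bit value hi:lo left by one bit, feeding bit_in into bit 0."""
    new_hi = ((hi << 1) | (lo >> 31)) & MASK32
    new_lo = ((lo << 1) | bit_in) & MASK32
    return new_hi, new_lo


def greater_or_equal(a_hi, a_lo, b_hi, b_lo):
    """Two-word unsigned comparison: is a_hi:a_lo >= b_hi:b_lo?"""
    return a_hi > b_hi or (a_hi == b_hi and a_lo >= b_lo)


def subtract(a_hi, a_lo, b_hi, b_lo):
    """Two-word subtraction with borrow (caller guarantees a >= b)."""
    lo = a_lo - b_lo
    borrow = 1 if lo < 0 else 0
    hi = a_hi - b_hi - borrow
    return hi & MASK32, lo & MASK32


def udiv64_with_32bit_words(n, d):
    """Return (quotient, remainder) of the unsigned 64bit division n / d."""
    if not (0 <= n < 2**64 and 0 < d < 2**64):
        raise ValueError("expects 0 <= n < 2**64 and 0 < d < 2**64")
    n_hi, n_lo = n >> 32, n & MASK32
    d_hi, d_lo = d >> 32, d & MASK32
    q_hi = q_lo = r_hi = r_lo = 0

    for _ in range(64):                       # one pass per dividend bit
        next_bit = n_hi >> 31                 # top bit of the dividend
        n_hi, n_lo = shift_left_1(n_hi, n_lo, 0)
        r_hi, r_lo = shift_left_1(r_hi, r_lo, next_bit)
        q_hi, q_lo = shift_left_1(q_hi, q_lo, 0)
        if greater_or_equal(r_hi, r_lo, d_hi, d_lo):
            r_hi, r_lo = subtract(r_hi, r_lo, d_hi, d_lo)
            q_lo |= 1                         # this quotient bit is 1

    return (q_hi << 32) | q_lo, (r_hi << 32) | r_lo


print(udiv64_with_32bit_words(2**64 - 1, 3))
```

Each pass through the loop involves several shifts, a two-word compare and possibly a two-word subtract, all for one bit of the answer. On a 64bit CPU the same result comes from a single divide instruction.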

Update: Here is a link to the PT8 development thread.
