AMD's new ThreadRipper CPUs actually contain two CPU modules, which means two separate memory buses.

As a result, Non-Uniform Memory Access (NUMA) becomes a possibility: some of the time CPU 0 might use its own RAM, and some of the time CPU 0 might need to use the RAM connected to CPU 1. In theory, using the RAM directly connected to your own CPU should be faster, and using the more remote RAM should be slower.

We set out to test this today using PerformanceTest's advanced memory test on a ThreadRipper 1950X. Memory in use was Corsair cmk32gx4m2a2666c16 (DDR4, 2666 MHz, 16-18-18-35, 4 x 16GB).

Note that you need PerformanceTest Version 9.0 build 1022 (20/Dec/2017) or higher to do this. In previous releases the advanced memory test was not NUMA aware (or had NUMA-related bugs).

Graphs are below, but the summary is:

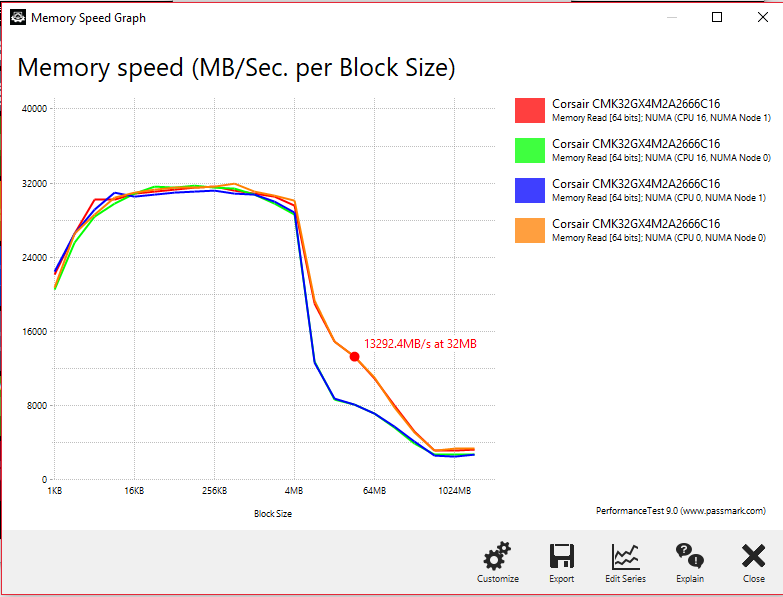

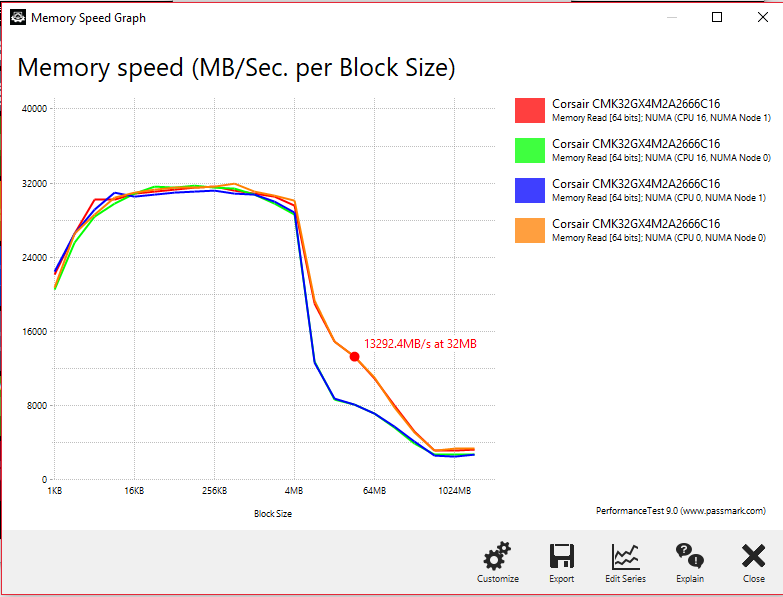

1) For sequential reading of memory, there is no significant performance difference for small blocks of RAM, but for larger blocks that fall outside the CPU's cache, the performance difference can be up to 60%. (This test corresponds to our "memory speed per block size" test in PerformanceTest.) So having NUMA-aware applications is important for a system like this.

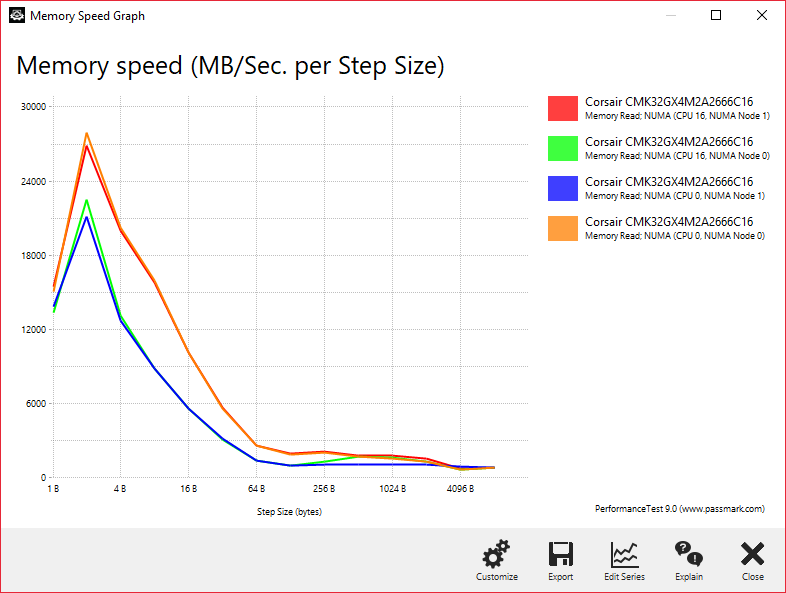

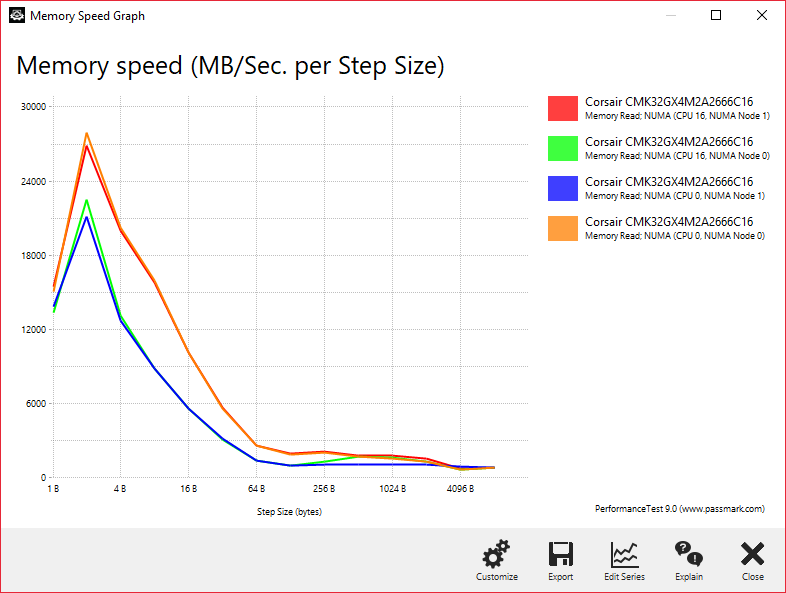

2) For non-sequential reads, where we skip forward by some step factor before reading the next value, there is a significant performance hit. (This corresponds to our "memory speed per step size" test.) We suspect this is due to the cache being a lot less effective. Performance degradation was around 20%.

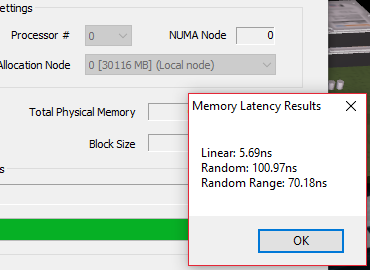

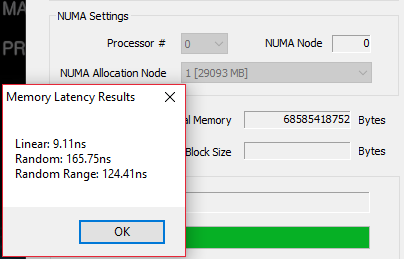

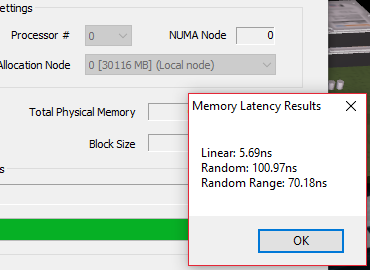

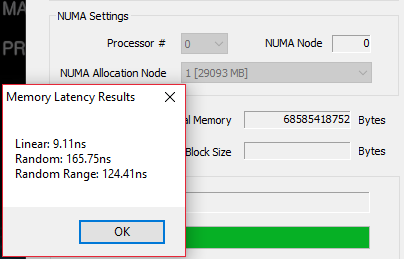

3) Latency is higher for distant nodes than for memory on the local node: memory accesses are around 60% slower. This again shows why NUMA-aware applications (and operating systems) are important. What we did notice, however, is that if we didn't explicitly select the NUMA node, most of the time the system itself seemed to select the best node anyway (using malloc() on Win10). We don't know if this was by design or just good luck.
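To make the access patterns in points 1 and 2 concrete, here is a minimal sketch in C. It is not PerformanceTest's code; the function names `strided_read_sum` and `strided_read_mb_per_s` are made up for illustration, and the timing uses the coarse standard `clock()` call. A step of 1 is a plain sequential read, and larger steps skip forward before each read.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <time.h>

/* Hypothetical helper (not PerformanceTest code): sum all `count` words of
 * `buf`, visiting them `step` words apart. step == 1 is a plain sequential
 * read; larger steps jump ahead before each read. */
uint64_t strided_read_sum(const uint64_t *buf, size_t count, size_t step)
{
    uint64_t sum = 0;
    if (count == 0 || step == 0)
        return 0;
    if (step > count)
        step = count;
    /* Each pass starts one word later, so every word is read exactly once. */
    for (size_t start = 0; start < step; ++start)
        for (size_t i = start; i < count; i += step)
            sum += buf[i];
    return sum;
}

/* Hypothetical helper: time one strided pass over a malloc()'d buffer of
 * `bytes` bytes. Returns MB/s, or -1.0 if the allocation failed or the run
 * was too short for clock() to measure. */
double strided_read_mb_per_s(size_t bytes, size_t step)
{
    size_t count = bytes / sizeof(uint64_t);
    uint64_t *buf = malloc(count * sizeof *buf);
    if (buf == NULL || count == 0) {
        free(buf);
        return -1.0;
    }
    for (size_t i = 0; i < count; ++i)
        buf[i] = i;                      /* touch every page before timing */

    clock_t t0 = clock();
    volatile uint64_t sink = strided_read_sum(buf, count, step);
    clock_t t1 = clock();
    (void)sink;                          /* keep the read from being dropped */
    free(buf);

    double secs = (double)(t1 - t0) / CLOCKS_PER_SEC;
    if (secs <= 0.0)
        return -1.0;
    return (double)bytes / (1024.0 * 1024.0) / secs;
}
```

Comparing `strided_read_mb_per_s(bytes, 1)` against a larger step, for buffers both smaller and larger than the CPU cache, gives a rough feel for the two tests. Because the buffer comes from plain malloc(), this sketch measures whichever NUMA node the system happened to pick.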

Note: AMD's EPYC CPUs should behave the same, but we only have a ThreadRipper system to play with.

Latency results - Same NUMA node

Latency results - Remote NUMA node

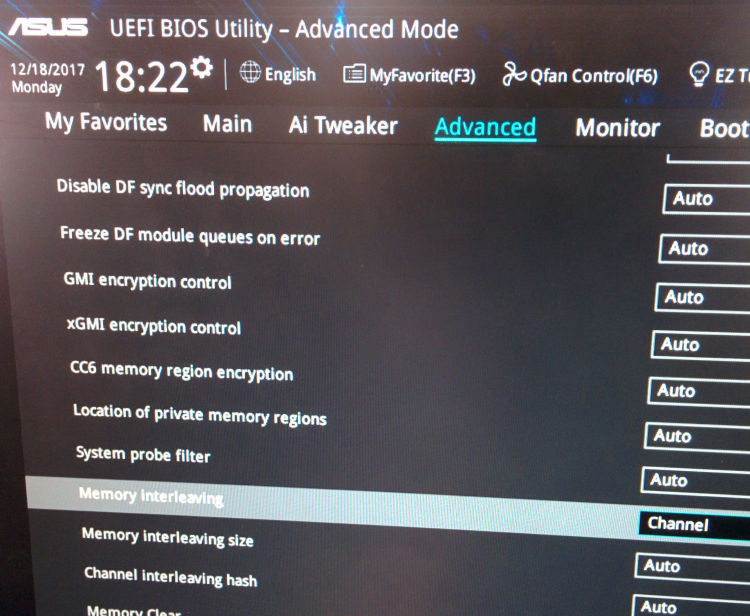

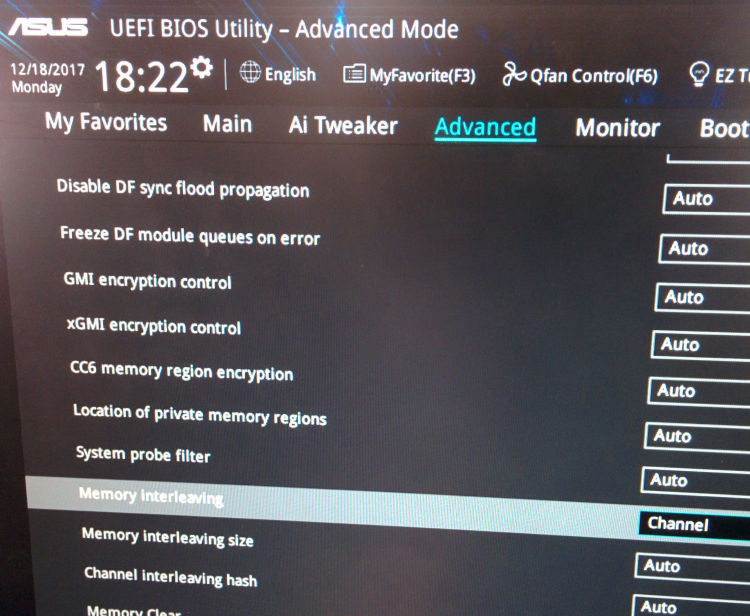

Also, it is worth noting that the default BIOS setup (ASUS motherboard) didn't enable NUMA. It had to be manually enabled.

This was done in advanced mode, under "DF common options", by selecting the "memory interleaving" setting and changing it from "Auto" to "Channel".
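Once NUMA is enabled in the BIOS, an application can ask Windows how many nodes it sees and request memory with a preferred node, which is the explicit node selection mentioned in point 3. The sketch below is an illustration and not how PerformanceTest does it. The wrapper names `numa_node_count`, `numa_alloc_on_node` and `numa_free` are hypothetical; the Win32 calls they wrap (`GetNumaHighestNodeNumber`, `VirtualAllocExNuma`, `VirtualFree`) are real. On non-Windows builds the code simply falls back to malloc() so that it still compiles.

```c
#include <stddef.h>
#include <stdlib.h>
#ifdef _WIN32
#include <windows.h>
#endif

/* Hypothetical wrapper: number of NUMA nodes the OS reports.
 * Returns 1 when NUMA is disabled, unknown, or not on Windows. */
unsigned numa_node_count(void)
{
#ifdef _WIN32
    ULONG highest = 0;
    if (GetNumaHighestNodeNumber(&highest))
        return (unsigned)highest + 1;
#endif
    return 1;
}

/* Hypothetical wrapper: allocate `bytes` with a preference for NUMA node
 * `node`. On Windows the node is a preference passed to the OS; elsewhere
 * the node is ignored and plain malloc() is used. Returns NULL on failure. */
void *numa_alloc_on_node(size_t bytes, unsigned node)
{
#ifdef _WIN32
    return VirtualAllocExNuma(GetCurrentProcess(), NULL, bytes,
                              MEM_RESERVE | MEM_COMMIT, PAGE_READWRITE,
                              (DWORD)node);
#else
    (void)node;
    return malloc(bytes);
#endif
}

/* Release memory obtained from numa_alloc_on_node(). */
void numa_free(void *p)
{
#ifdef _WIN32
    if (p != NULL)
        VirtualFree(p, 0, MEM_RELEASE);
#else
    free(p);
#endif
}
```

If `numa_node_count()` still returns 1 on a ThreadRipper system, the memory interleaving setting is the first thing to check. For a local versus remote comparison to be meaningful, the thread doing the reads would also need to stay on a known node, which this sketch does not attempt.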

